URL Parameters: What They Are and How to Use Them Properly

Written by Yongi Barnard

URL parameters are strings of text you add to a URL that let you track and organize your webpages. You add parameters to the end of URLs after a “?” symbol. They’re also known as query strings.

Here’s an example of a URL with tracking parameters at the end:

yourdomain.com/example-product/?utm_source=facebook&utm_medium=social&utm_campaign=promotion

And here’s an example with filtering parameters:

yourdomain.com/example-product?size=8&color=blue

But what do these URL parameters actually do? And how do you use them properly?

That’s what I’ll cover in this post. Let’s start with how they work.

How Do URL Parameters Work?

URL parameters track user behavior or modify page content without the need for a separate URL for each variation.

No matter the goal, they all follow the same structure.

Structure and Functionality

URL parameters include key-value pairs formatted as “key=value”:

- The “key” is what you want to adjust or track (e.g., “color” or “utm_source”)

- The “value” is the specific setting or data for that parameter (e.g., “yellow” or “newsletter”)

(Note that you can have multiple values for the same key.)

Take, for example, this URL from a Facebook ad that tracks visitor interactions:

eu.huel.com/products/huel-black-edition?utm_source=fb&utm_medium=cpc&utm_campaign=ROE_ACQ_PRO_PURCHASES_7D-CLICK_JJ24_ASC

Here’s a breakdown of its URL parameters using the key-value structure:

- utm_source=fb: “utm_source” is the key, denoting the origin of the website traffic. The “fb” part shows that the traffic is coming from Facebook

- utm_medium=cpc: “utm_medium” is the key, which stands for traffic medium, while “cpc” indicates the medium as cost-per-click (i.e., advertising)

- utm_campaign=ROE_ACQ_PRO_PURCHASES_7D-CLICK_JJ24_ASC: “utm_campaign” is the key, which stands for the campaign name, while “ROE_ACQ_PRO_PURCHASES_7D-CLICK_JJ24_ASC” is the name of the campaign set by the brand

Side note: When you have multiple parameters within a URL, you separate each key-value pair with an ampersand (“&”).
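To make the structure concrete, here’s a minimal Python sketch that splits the URL above into its key-value pairs using the standard library. The “https://” prefix and the list_parameters helper are my additions for illustration.

```python
from urllib.parse import urlsplit, parse_qsl

# The example URL from above, with "https://" added so it is a complete URL.
url = (
    "https://eu.huel.com/products/huel-black-edition"
    "?utm_source=fb&utm_medium=cpc"
    "&utm_campaign=ROE_ACQ_PRO_PURCHASES_7D-CLICK_JJ24_ASC"
)


def list_parameters(url):
    """Return the URL's key-value pairs in the order they appear."""
    query = urlsplit(url).query  # everything after the "?"
    return parse_qsl(query)      # splits on "&", then on the first "="


for key, value in list_parameters(url):
    print(f"{key} -> {value}")
```

Each printed line is one key-value pair, in the same order as the breakdown above.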

Types of URL Parameters

There are two types of URL parameters: active and passive.

Active Parameters

Active parameters change the content or functionality of a webpage dynamically, usually based on user input.

Here’s an example from our website:

backlinko.com/blog/search?query=digital+marketing

The parameter “?query=digital+marketing” ensures the on-site search page (/blog/search) shows results related to the search phrase (in this case, “digital marketing”).

Note: While we call these “active” parameters and say they modify pages, the parameters themselves don’t cause the change you see on the screen.

If you don’t have corresponding functionality to show a yellow version of your product, just typing “/product?color=yellow” into the address bar won’t do anything. It’ll typically just take you to the canonical (primary) version of the page.

For example, we don’t have different variants of our keyword research tool page. So, adding parameters to the end of the URL won’t change anything about the way it looks or functions.

This is a small but important distinction. URL parameters don’t “do” anything on their own. You need to set your site up in a way that shows the right page or screen based on these parameters.
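Here’s a rough sketch of what that setup could look like on the server side. The AVAILABLE_COLORS set, the DEFAULT_COLOR value, and the resolve_color function are all hypothetical. The point is only that your own code has to read the parameter and decide what to show.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical list of product variants the site can actually display.
AVAILABLE_COLORS = {"blue", "red"}
DEFAULT_COLOR = "blue"


def resolve_color(url):
    """Pick the product color to render for a requested URL."""
    params = parse_qs(urlsplit(url).query)
    requested = params.get("color", [DEFAULT_COLOR])[0]
    # Values the site doesn't support fall back to the default,
    # so the visitor simply sees the primary version of the page.
    return requested if requested in AVAILABLE_COLORS else DEFAULT_COLOR
```

With this logic, “/product?color=red” renders the red variant, while “/product?color=yellow” quietly falls back to the default because no yellow variant exists.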

You can also use active parameters to tailor the content displayed to users. This could be to align it with their preferences or past behavior for a more personalized experience.

For example, Yelp uses URL parameters that correspond to the filters you apply. Like this:

https://www.yelp.ie/search?find_desc=Lunch&find_loc=New+York&attrs=OutdoorSeating%2CDogsAllowed

In this example, you can see parameter keys and values for:

- Meal: find_desc=Lunch

- Location: find_loc=New+York

- Features: attrs=OutdoorSeating%2CDogsAllowed

In this case, the “%2C” is the URL-encoded representation of the comma. This is because the key is “attrs” (corresponding to the “Features” filter), and we’ve selected two values: “OutdoorSeating” and “DogsAllowed.”

This is useful for bookmarking results pages to come back to over time (e.g., to keep an eye on prices for specific areas). And it makes it easy to share results with others, as the URL with the parameters will take them to the same page with the same filters applied.
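If you want to check the encoding yourself, this short Python sketch decodes the Yelp URL above and then re-encodes the same filters. It only uses the standard library. The variable names are mine.

```python
from urllib.parse import urlsplit, parse_qs, urlencode

url = (
    "https://www.yelp.ie/search?find_desc=Lunch&find_loc=New+York"
    "&attrs=OutdoorSeating%2CDogsAllowed"
)

params = parse_qs(urlsplit(url).query)

# parse_qs decodes "+" to a space and "%2C" to a comma.
location = params["find_loc"][0]          # "New York"
features = params["attrs"][0].split(",")  # ["OutdoorSeating", "DogsAllowed"]

# Encoding the same filters again reproduces the original query string.
rebuilt = urlencode({
    "find_desc": params["find_desc"][0],
    "find_loc": location,
    "attrs": ",".join(features),
})
print(rebuilt == urlsplit(url).query)  # True
```

That round trip is why a shared or bookmarked URL restores the same filters.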

Passive Parameters

Passive URL parameters are used mainly for tracking and analytics. They don’t change a webpage’s content or functionality.

Here’s an example from a ClickUp ad:

clickup.com/compare/monday-vs-clickup?utm_source=google&utm_medium=cpc&utm_campaign=trial_all-devices

In this example, the parameters following the “?” are passive. They collect data without modifying the site’s look or functionality.

In other words, you’ll see exactly the same page if you go to this URL instead:

clickup.com/compare/monday-vs-clickup

Analytics and advertising tools will often set these parameters automatically. In other words, you don’t necessarily need to type “utm_source=” into anything. And you usually won’t need to worry about the specific keys and values.

Instead, you’ll probably just interact with a more user-friendly interface where you set up things like campaign names, and the tool will automatically apply them as needed.
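Under the hood, that kind of tool does something roughly like the sketch below. This is not how any particular ad platform works. The add_utm function is a hypothetical helper that appends the three UTM keys from the example to a clean URL.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def add_utm(url, source, medium, campaign):
    """Return the URL with utm_source, utm_medium, and utm_campaign set."""
    utm = {
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
    }
    parts = urlsplit(url)
    # Keep existing parameters, but drop old copies of the three UTM keys.
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in utm
    ]
    params.extend(utm.items())
    return urlunsplit(parts._replace(query=urlencode(params)))


print(add_utm(
    "https://clickup.com/compare/monday-vs-clickup",
    source="google",
    medium="cpc",
    campaign="trial_all-devices",
))
```

The output matches the ClickUp ad URL above, with “https://” in front.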

Note: URL parameters don’t offer a perfect tracking solution.

Imagine someone is writing an article about Monday vs. ClickUp, and their research takes them to the search results for that term. They click the ad, and think the page is a useful resource, so they include a link to it in their guide.

If they link to the parameterized version of the URL, ClickUp’s Google Ads account might inaccurately classify users that click on it as ad clicks. If this happens at scale, it can skew ad performance numbers.

While unlikely to be a massive issue, it’s worth being aware of the potential inaccuracies parameters can lead to.

Why Are URL Parameters Important for Marketers?

Beyond the technical role of URL parameters in building dynamic websites, they also help you analyze and refine your marketing campaigns.

You can use them to:

- Identify which channels drive traffic to your website

- Understand how visitors interact with your website

- Tailor content and user experiences based on visitor behavior

- Evaluate the performance and ROI of your marketing efforts

- Gauge the effectiveness of your influencer marketing campaigns

When Do URL Parameters Become an SEO Issue?

Google understands and reads URL parameters. This means it can index pages with parameters.

So, you must carefully manage your URL parameters to avoid potential complications.

URL parameters can present SEO issues regarding:

- Duplicate content

- Crawl budget waste

- Link equity distribution

- URL structure and usability issues

Let’s look at each of these in detail.

Duplicate Content Issues

When you use URL parameters to sort or filter items on a site, you may end up with multiple URLs that display nearly (or completely) identical content.

This can confuse search engines and cause duplicate content issues.

As a result, they may struggle to identify which version of a page to prioritize in search results.

This shouldn’t be a huge issue if you use proper canonicalization.

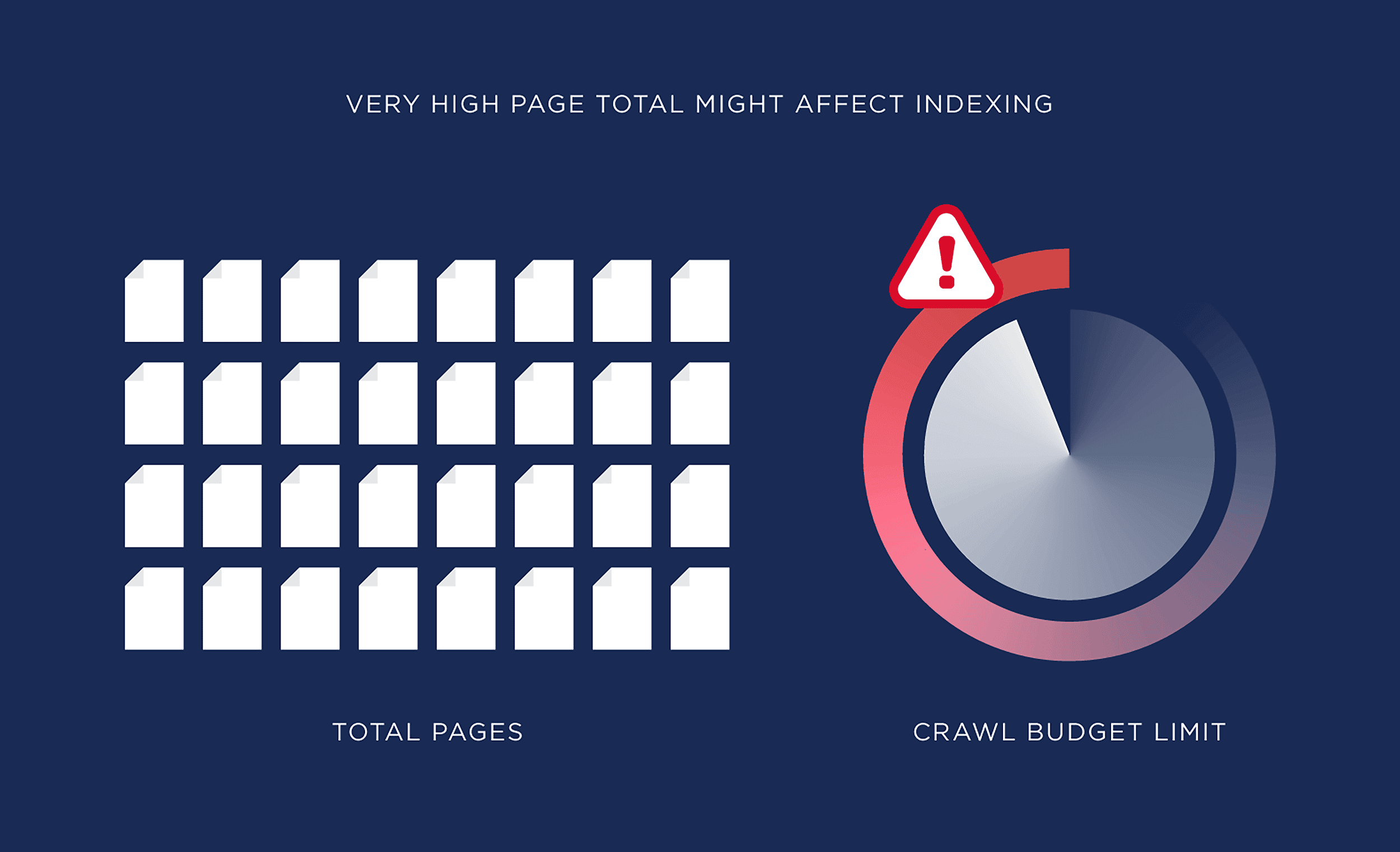

Crawl Budget Problems

With over a billion websites live on the Internet, there’s a limit on the amount of resources Google’s web crawlers can spend crawling any single website. In SEO, we often refer to this as a site’s crawl budget.

When a website has many URL parameter variations, it can result in crawl budget issues.

Essentially, the crawler might spend too much time crawling similar pages (the ones with the parameters), possibly ignoring other important content on the website.

This is usually only a potential issue for websites with thousands (or millions) of pages.
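To get a feel for how quickly parameter variations multiply, here’s a small sketch. The filters and their values are made up. It counts only URLs where every filter is set, so a real site with optional filters would have even more.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical filters on a single category page.
FILTERS = {
    "size": ["6", "7", "8", "9"],
    "color": ["blue", "red", "black"],
    "sort": ["price_asc", "price_desc", "newest"],
}


def parameter_variations(base_url, filters):
    """Return every URL produced by choosing one value per filter."""
    keys = list(filters)
    return [
        f"{base_url}?{urlencode(dict(zip(keys, combo)))}"
        for combo in product(*(filters[key] for key in keys))
    ]


urls = parameter_variations("https://yourdomain.com/shoes", FILTERS)
print(len(urls))  # 4 * 3 * 3 = 36
```

Three modest filters turn one page into 36 crawlable URLs. Multiply that across thousands of category pages and the crawl budget concern becomes clearer.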

Link Equity Dilution

Links channel SEO value (or authority) from one page to another.

This SEO value, known as link equity (or “link juice”), can help improve your rankings.

However, when links point to various versions of the same URL, the link equity gets divided among those versions.

As a result, the full link equity potential is diluted because search engines see each URL as separate, rather than recognizing them as pointing to the same content.

But like with duplicate content issues, using canonical tags can usually prevent this from being an issue.

Essentially, Google can work out what the main version of the page is, and the link equity is effectively all (or nearly all) transferred to that single URL.

URLs That Are Not SEO-Friendly

URLs with too many extra parameters can be fairly long and look awkward. Google’s URL structure best practices suggest keeping URL structures simple and easy to read—so it’s best to stick to that advice.

Google also often truncates long URLs in search results (if it shows them at all). But it’s still generally better to keep them fairly short to improve readability.

Further reading: How to Create SEO-Friendly URLs

Best Practices for URL Parameters

Follow these best practices to make sure that URL parameters don’t negatively impact your rankings or user experience:

1. Use Canonical Tags

Help search engines identify the original version of a webpage by using canonical tags.

Look at this Inboxally URL from a Reddit ad with parameters added to the base URL:

inboxally.com/?rdt_cid=4345946952391789066&utm_campaign=getoutofspam&utm_medium=cpc&utm_source=reddit

Now, take a look at the page’s HTML code. You’ll see it uses canonical tags.

These tags point search engines to the original version of the page, so they’ll index that one and ignore other variations.
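Here’s a minimal sketch of how a site might generate that tag. It assumes every parameter on the page is passive, so the canonical URL is simply the URL without its query string. The canonical_link_tag function is hypothetical, and the output is an illustration rather than a copy of Inboxally’s actual markup.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(url):
    """Build a canonical tag that points at the URL without its query string."""
    parts = urlsplit(url)
    # Assumption: all parameters are passive, so dropping them is safe.
    clean = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(clean, quote=True)}">'


print(canonical_link_tag(
    "https://inboxally.com/?rdt_cid=4345946952391789066"
    "&utm_campaign=getoutofspam&utm_medium=cpc&utm_source=reddit"
))
# <link rel="canonical" href="https://inboxally.com/">
```

If a page uses active parameters that create genuinely different content, you’d need to decide which of those to keep in the canonical URL instead of stripping everything.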

2. Limit the Use of URL Parameters

Keep your URLs clean and simple, and avoid using URL parameters unless they’re absolutely necessary.

For example:

It’s possible to use URL parameters when you have multiple language versions of your pages, like so:

https://www.yourwebsite.com?loc=es

But this is not the recommended way to do it.

Instead of using URL parameters, you can do this with subdomains:

https://es.semrush.com/

Or with subdirectories:

https://slack.com/intl/es-es

3. Use Robots.txt and Meta Tags Carefully

Your robots.txt file helps you manage which pages on your website search engines are allowed to crawl. You can use it to block search engines from accessing URLs with unnecessary parameters.

The lines you’d add to your file would be something like:

User-agent: *
Disallow: /*?*

But you can get more specific with the parameters you block. For example, Google’s own robots.txt file takes this more targeted approach.

This can be useful for very large sites, like ecommerce stores, that want to minimize crawl budget waste. But it can lead to unintended consequences.

For example, if a site links to your site using a parameterized URL (e.g., they found the page by clicking on one of your ads with parameters), no SEO value would pass down to your site if you block these URLs via robots.txt.

So, there are tradeoffs to be aware of.
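Before you add a wildcard rule like the one above, it helps to check which URLs it would actually block. The sketch below is a simplified matcher I wrote for illustration. It handles only the “*” wildcard and a trailing “$”, and it ignores Allow rules and rule precedence, so treat it as a rough check rather than a full robots.txt parser.

```python
import re


def rule_matches(pattern, path):
    """Check a path against a Disallow pattern with "*" and "$" support."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # "*" matches any run of characters; everything else is literal.
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += "$"
    # Rules match from the start of the path, so re.match is enough.
    return re.match(regex, path) is not None


for path in ["/example-product", "/example-product?size=8&color=blue"]:
    print(path, "blocked" if rule_matches("/*?*", path) else "allowed")
```

The clean URL stays crawlable, while the parameterized version is blocked.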

You can also block indexing (not crawling) with the “noindex” meta tag. But this can be tough to do correctly at scale.

Generally, it’s best to just ensure you use proper canonicalization.

4. Use User-Friendly URLs

Prioritize clear, descriptive, and short URLs. Avoid using lengthy and complex ones like:

https://www.yourwebsite.com/products?category=laptops&id=1234Instead, use simple, path-based URLs such as:

https://www.yourwebsite.com/laptops/dell-latitude-550

Important: Changing your site structure is a big job. And it’s easy to make mistakes that can have disastrous consequences. Even if you do everything “right,” it can still drastically hurt your rankings in the short and potentially long term.

So, it’s best to implement user-friendly URLs going forward, rather than making changes to hundreds, thousands, or potentially millions of URLs at once.

5. Monitor and Audit Your Website Regularly

Conducting regular site audits can help you spot issues that URL parameters might cause.

You can set up regular website audits with tools like Semrush’s Site Audit.

Just enter your domain and click the “Start Audit” button.

You’re able to modify a number of settings for the audit, including crawl scope, specific URLs to include or exclude from the audit, crawl source, and more.

In most cases, you can stick with the default settings and click on “Start Site Audit.”

Once the audit is complete, you’ll be able to see a detailed report outlining all the discovered issues.

From there, head to the “Issues” tab and search for “url.”

Specifically, pay attention to issues concerning canonical URLs and the “too many parameters” warning.
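If you want a quick check of your own between audits, a few lines of Python can flag URLs that carry a lot of parameters. The MAX_PARAMS threshold here is an arbitrary value I picked for the example. It is not the rule Semrush uses for its warning.

```python
from urllib.parse import urlsplit, parse_qsl

MAX_PARAMS = 2  # hypothetical threshold, chosen only for this example


def too_many_parameters(urls, limit=MAX_PARAMS):
    """Return (url, parameter_count) for URLs with more than `limit` parameters."""
    flagged = []
    for url in urls:
        pairs = parse_qsl(urlsplit(url).query, keep_blank_values=True)
        if len(pairs) > limit:
            flagged.append((url, len(pairs)))
    return flagged


crawled = [
    "https://yourdomain.com/example-product/?utm_source=facebook&utm_medium=social&utm_campaign=promotion",
    "https://yourdomain.com/example-product?size=8&color=blue",
    "https://yourdomain.com/example-product",
]
for url, count in too_many_parameters(crawled):
    print(count, url)
```

You could feed it a list of URLs exported from your crawler or server logs.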

Note: A free Semrush account lets you audit up to 100 URLs. Or you can use this link to access a 14-day trial on a Semrush Pro subscription.

6. Optimize Your Sitemap

Use your sitemap to tell Google about your most important URLs.

Include only the canonical URLs, which are the primary versions of the pages you want indexed.

Remove any unnecessary URLs with tracking parameters, or any other parameterized URLs that you don’t want Google to index.

(This won’t guarantee that Google won’t index them, but including them in your sitemap is a strong hint that you DO want them indexed. So it’s best to omit them.)
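As a rough illustration, this sketch builds a sitemap from a list of URLs and leaves out anything with a query string. It assumes every parameterized URL on your site is a non-canonical variation, which may not be true if you rely on active parameters for unique pages. The build_sitemap function is hypothetical.

```python
from urllib.parse import urlsplit
from xml.sax.saxutils import escape


def build_sitemap(urls):
    """Return sitemap XML containing only URLs without a query string."""
    canonical = [url for url in urls if not urlsplit(url).query]
    entries = "\n".join(
        f"  <url><loc>{escape(url)}</loc></url>" for url in canonical
    )
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{entries}\n"
        "</urlset>"
    )


print(build_sitemap([
    "https://yourdomain.com/example-product",
    "https://yourdomain.com/example-product?size=8&color=blue",
    "https://yourdomain.com/example-product/?utm_source=facebook",
]))
```

Only the first URL ends up in the output.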

Finally, submit your updated sitemap through Google Search Console.

Are URL Parameters Harming Your Website’s Rankings?

URL parameters can inadvertently create duplicate content and potentially create crawl budget issues. Both of these can affect your site’s performance in search.

The best way to know if this is the case?

Run a website audit.

Use our SEO Checker and SEO audit template to guide you through this process, and use Semrush’s Site Audit tool to quickly find issues at scale.

Backlinko is owned by Semrush. We’re still obsessed with bringing you world-class SEO insights, backed by hands-on experience. Unless otherwise noted, this content was written by either an employee or paid contractor of Semrush Inc.